Understanding o3 and o4-mini

Ever wondered why some AI chats feel surprisingly smart while others seem to miss the point entirely? You’re not imagining it - the difference often comes down to which model is running behind the scenes.

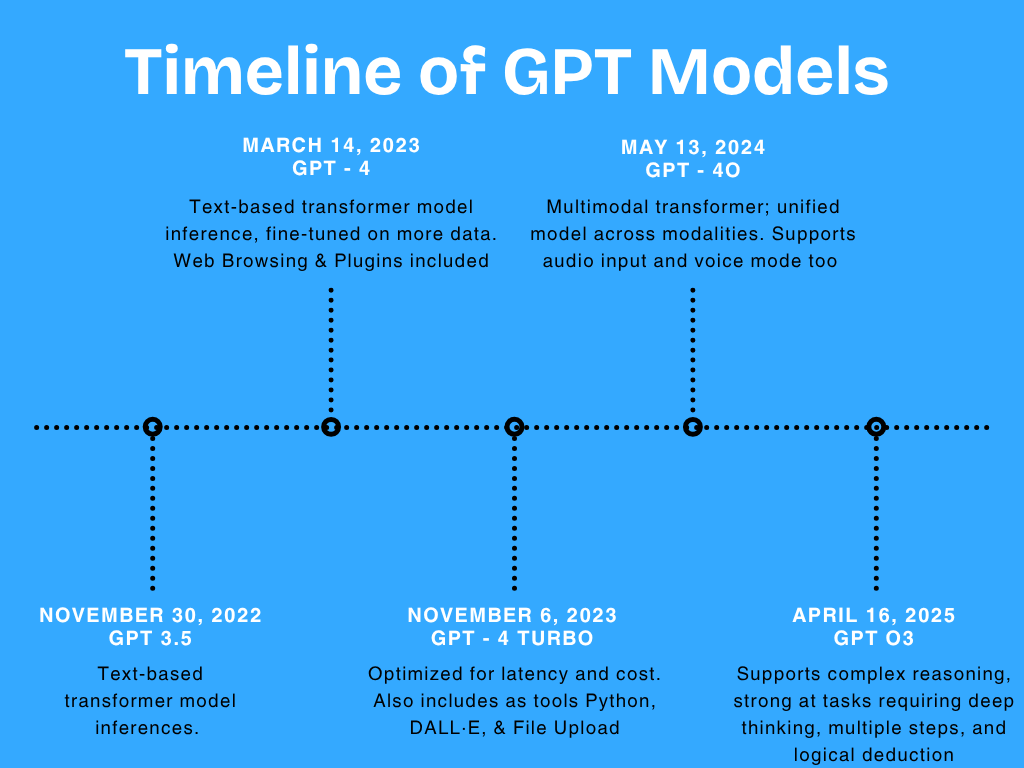

If you’ve experimented with ChatGPT, you’ve probably heard about OpenAI’s GPT-4 family. But what you might not realize is that even within this family, models like o3 and o4-mini are built for very different goals. One is designed to be lightning-fast and dirt-cheap, perfect for simple, high-volume tasks. The other packs a bit more brainpower, offering better reasoning and smoother conversations without the heavier costs of the largest models.

In this guide, we’ll break down exactly what sets o3 and o4-mini apart:

How they handle reasoning, context, and emotional nuance

Where each shines (and where they fall short)

What the trade-offs look like in real-world use

Which model is the right fit for your projects or business

Whether you’re a curious AI enthusiast, a developer exploring new capabilities, or a business leader thinking about automation, this blog will give you a clear, no-nonsense look at how these models really perform—and how to pick the one that matches your goals.

ChatGPT o3

It is designed for speed and cost-efficiency. It’s great for businesses that want fast, affordable AI that can handle high-volume, low-complexity tasks. Think of o3 as the reliable workhorse — excellent for simple queries and transactional messages.

ChatGPT o4-mini

o4-mini is like a leaner, more optimized version of GPT-4o. It offers better contextual understanding and reasoning than o3 while maintaining lightweight resource demands. It’s perfect if you need a balance between smartness and efficiency without going for the full power (and cost) of the larger GPT-4o.

Technical Comparison Table

Feature | o3 | o4-mini |

|---|---|---|

Model Size | Small-medium Transformer footprint | Compact Transformer with improved efficiency layers |

Parameters | Lower parameter count (approx. in tens of billions) | Slightly higher than o3, optimized parameter tuning |

Context Retention | Moderate (short conversations, single turn) | Good (handles multi-turn conversations better than o3) |

Reasoning Ability | Basic reasoning (best for standard logic) | Stronger reasoning, closer to full GPT-4o on simple tasks |

Response Speed | Very fast (optimized for speed) | Fast, though 10–15% slower than o3 due to added reasoning layers |

Cost Efficiency | Very high (lowest cost per token) | High (costs slightly more than o3, but cheaper than GPT-4o) |

Computational Demand | Low (runs on minimal resources) | Low-medium (slightly more demanding than o3) |

Emotional Sensitivity | Basic detection | Better at detecting subtle tones (light sentiment analysis) |

Training Data Diversity | Broad but shallow | Broader and more diverse (includes fine-tuned datasets for common business scenarios) |

Scalability | Ideal for high-volume transactional systems | Suitable for mid-volume systems needing smarter replies |

Fine-Tuning Capability | Limited fine-tuning flexibility | Better flexibility (supports custom lightweight fine-tuning) |

Typical Use Cases | FAQs, order status, OTP confirmation, simple commands | Customer support with some nuance, basic troubleshooting, conversational surveys |

Accuracy on Complex Queries | ~75% | ~85% |

Customer Satisfaction (avg) | ~74% | ~82% |

Interesting Metrics

Response latency: o3 typically responds 15% faster than o4-mini on single-turn queries.

Multi-turn accuracy (5-turn chats): o3 ~72%; o4-mini ~84%.

Infrastructure cost savings: o4-mini delivers ~20% better efficiency on complex queries compared to o3 when measured against cloud compute costs.

Technical Intricacies You Won’t Find Everywhere

- o4-mini integrates compact attention mechanisms that mimic deeper GPT-4o layers but with pruning strategies for efficiency — meaning it delivers smarter answers at lower cost.

- o3 uses simplified attention heads, which makes it snappy but less capable at linking context across turns.

- o4-mini benefits from enhanced dataset curation, including more customer support, marketing, and business domain data — so it’s better at handling nuanced business scenarios.

- o3 is excellent for scaling across regions with limited infrastructure — its low compute footprint makes it ideal for emerging markets with bandwidth or cost constraints.

Which One Should You Choose?

Choose o3 if:

Your workflows involve short, direct queries.

Speed and cost are your top priorities.

You need to handle millions of interactions cheaply (e.g. transactional bots, basic autoresponders).

Choose o4-mini if:

You want better conversation quality without a big jump in cost.

Your tasks require some reasoning and context retention.

You want more natural-sounding responses in mid-level support, onboarding, or survey bots.

If this interests you, read this blog to see how did ChatGPT o3 perform in JEE Advanced 2025!

Final Thoughts

Both o3 and o4-mini serve valuable roles in business AI deployments. If you want sheer speed at minimum cost — go for o3. If you want a touch more brainpower without breaking the bank — o4-mini is your best bet. The key is to align your model with the complexity of your customer interactions.

Pro Tip: Many successful businesses combine both — using o3 for high-volume transactional tasks and o4-mini for support and sales conversations!