In the past year, businesses of every size, especially emerging D2C brands, have been rethinking how they engage with customers. What used to be the domain of scripted chatbots and static FAQs has evolved into dynamic, AI-driven conversations that feel almost human.

But not all AI models are created equal.

So we asked the real question: Which LLMs are best suited for building accurate, fast, and cost-effective customer support chatbots today?

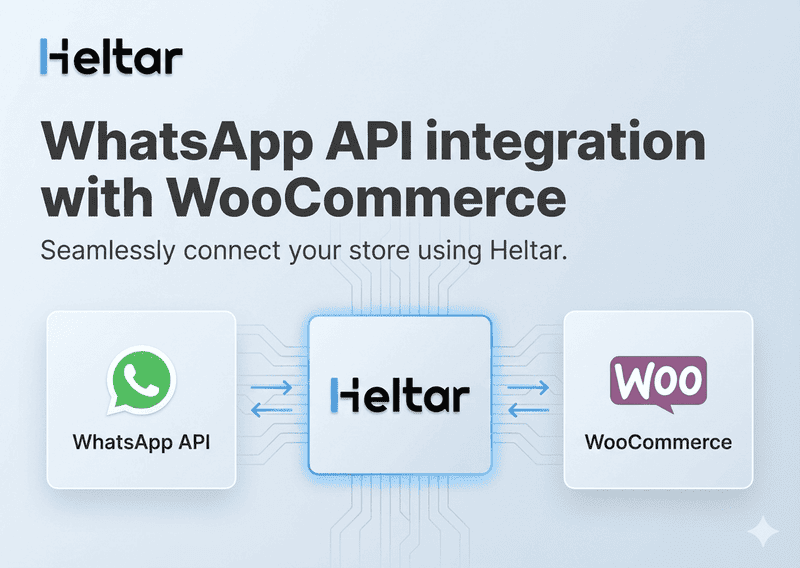

At Heltar, where we help businesses automate conversations via WhatsApp Business API, we’ve seen firsthand how the choice of a Large Language Model (LLM) can make or break the customer support experience. We tested the most current and capable LLMs for this exact use case. Here's what we found.

Why This Matters

Customer support isn’t about flashy answers. It’s about speed, accuracy, empathy, and staying grounded in your business’s knowledge base. Whether you're solving refund queries or onboarding users via WhatsApp, the model must:

- Respond within seconds

- Avoid hallucinations (making stuff up)

- Follow instructions precisely (e.g., JSON outputs)

- Stick to your business FAQs or knowledge base

- Fit your budget without sacrificing quality

So we benchmarked the most powerful and relevant models of 2025, focusing on real-world chatbot deployment.

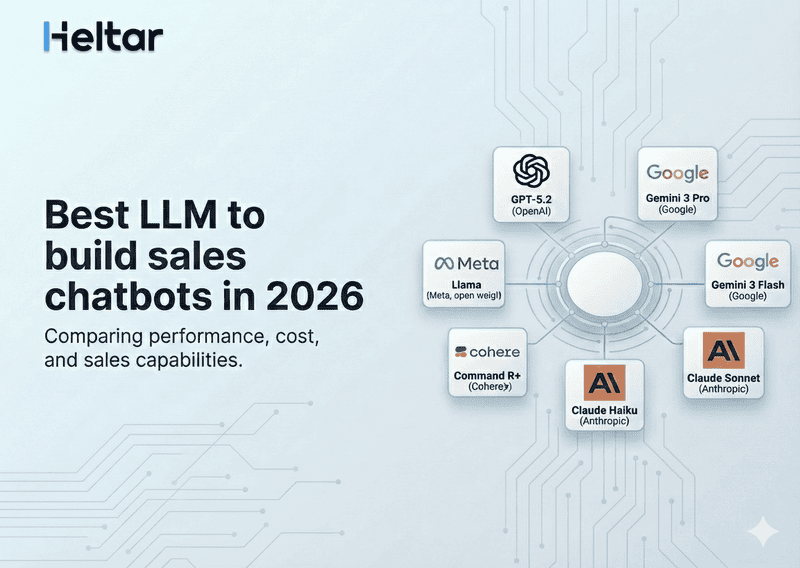

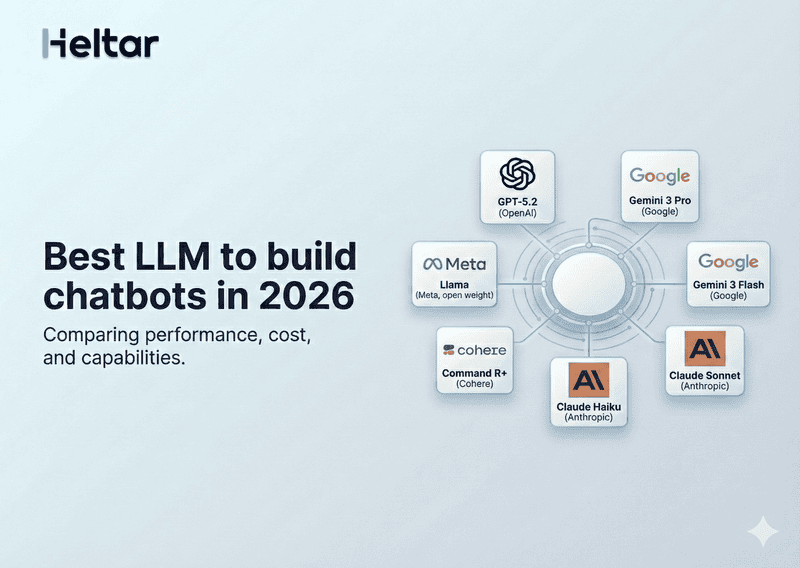

Models We Evaluated

We focused on 6 of the most production-ready models for building customer support bots:

| Model | Provider(s) | Type | Open Source? |
|---|---|---|---|
| GPT-4o | OpenAI | Proprietary | ❌ |
| Claude 3 Opus | Anthropic | Proprietary | ❌ |
| Gemini 1.5 Pro | Google DeepMind | Proprietary | ❌ |
| Mistral 7B / Mixtral 8x7B | Mistral AI + providers | Open-source | ✅ |
| Meta LLaMA 3 | Meta | Open-source | ✅ |
| Command R+ | Cohere | Open weights (non-commercial license) | ✅ |

Our Evaluation Criteria

We scored each model on six criteria critical to WhatsApp customer service automation:

| Criterion | Why It Matters |
|---|---|
| Accuracy | Can it answer correctly using your documents and instructions? |
| Speed (Latency) | Is the response quick enough for WhatsApp users expecting instant replies? |
| Instruction Following | Can it return structured formats like buttons, URLs, or JSON as required? |
| Hallucination Control | Does it invent information when uncertain? |
| Cost Efficiency | Is it affordable to scale across thousands of conversations? |
| Language & Tone | Can it adapt to formal Hindi, Hinglish, or region-specific tones in India? |

Performance Summary (Scores 1–5, higher is better)

| Model | Accuracy | Speed | Hallucination Control | Instruction Following | Cost Efficiency |
|---|---|---|---|---|---|
| GPT-4o | 5 | 4 | 5 | 5 | 2 |
| Claude 3 Opus | 5 | 3 | 5 | 5 | 2 |
| Gemini 1.5 Pro | 4.5 | 3.5 | 4.5 | 4 | 2.5 |
| Mistral 7B | 3.8 | 5 | 3.5 | 3.5 | 5 |
| LLaMA 3 (8B) | 4 | 4.5 | 4 | 3.5 | 5 |
| Command R+ | 4.2 | 5 | 4 | 4.5 | 4 |
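You can turn the table into a decision for your own priorities by taking a weighted average of the five scored criteria. The sketch below hard-codes the scores from the table. The function name `rank_models` and the two weight profiles (`startup`, `enterprise`) are hypothetical examples, not recommendations. Language & Tone is left out because the table does not score it.

```python
# Scores copied from the Performance Summary table (1-5, higher is better).
SCORES = {
    "GPT-4o":         {"accuracy": 5.0, "speed": 4.0, "hallucination": 5.0, "instructions": 5.0, "cost": 2.0},
    "Claude 3 Opus":  {"accuracy": 5.0, "speed": 3.0, "hallucination": 5.0, "instructions": 5.0, "cost": 2.0},
    "Gemini 1.5 Pro": {"accuracy": 4.5, "speed": 3.5, "hallucination": 4.5, "instructions": 4.0, "cost": 2.5},
    "Mistral 7B":     {"accuracy": 3.8, "speed": 5.0, "hallucination": 3.5, "instructions": 3.5, "cost": 5.0},
    "LLaMA 3 (8B)":   {"accuracy": 4.0, "speed": 4.5, "hallucination": 4.0, "instructions": 3.5, "cost": 5.0},
    "Command R+":     {"accuracy": 4.2, "speed": 5.0, "hallucination": 4.0, "instructions": 4.5, "cost": 4.0},
}


def rank_models(weights: dict[str, float]) -> list[tuple[str, float]]:
    """Return (model, weighted average score) pairs, best first."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    ranked = []
    for model, scores in SCORES.items():
        # Weighted average: each criterion score times its weight, divided by total weight.
        score = sum(scores[criterion] * w for criterion, w in weights.items()) / total
        ranked.append((model, round(score, 2)))
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


# Hypothetical priority profiles.
startup = {"accuracy": 2, "speed": 2, "hallucination": 2, "instructions": 1, "cost": 3}
enterprise = {"accuracy": 3, "speed": 1, "hallucination": 3, "instructions": 2, "cost": 1}

print(rank_models(startup)[:3])  # [('LLaMA 3 (8B)', 4.35), ('Mistral 7B', 4.31), ('Command R+', 4.29)]
print(rank_models(enterprise)[0])  # ('GPT-4o', 4.6)
```

With the `startup` weights, the three fast, low-cost models finish within 0.06 points of each other. Treat gaps that small as ties and let a pilot on your own conversations decide.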

Key Insights

1. GPT-4o is still the gold standard, but costly

If you're running high-value customer conversations (say, for a D2C luxury brand or a financial product with regulatory oversight), GPT-4o was unmatched in our tests for reasoning, tone control, and hallucination prevention. It also handles multilingual prompts, structured outputs, and RAG flows with ease. But be prepared for roughly 10x the token cost of open-source models.
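What a 10x token-price gap means in practice depends on volume. The back-of-the-envelope sketch below assumes a single blended price per token, although real providers usually price input and output tokens separately. The volumes and the two prices are hypothetical and were chosen only to reproduce the 10x ratio, so check current provider pricing before relying on any number.

```python
def monthly_token_cost(conversations_per_month: int, tokens_per_conversation: int,
                       usd_per_million_tokens: float) -> float:
    """Blended token cost in USD for one month of conversations."""
    total_tokens = conversations_per_month * tokens_per_conversation
    return total_tokens / 1_000_000 * usd_per_million_tokens


# Hypothetical inputs: 50,000 conversations/month, ~2,000 tokens each
# (system prompt + retrieved context + customer message + reply).
PREMIUM_PRICE = 10.0  # USD per 1M tokens (placeholder, not a real list price)
OPEN_PRICE = 1.0      # USD per 1M tokens (placeholder, not a real list price)

premium = monthly_token_cost(50_000, 2_000, PREMIUM_PRICE)
open_model = monthly_token_cost(50_000, 2_000, OPEN_PRICE)
print(f"premium: ${premium:,.0f}/month, open model: ${open_model:,.0f}/month")
# premium: $1,000/month, open model: $100/month
```

Substitute current prices and your own average tokens per conversation. Retrieved context can make up a large share of each prompt in RAG flows, so include it in the count.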

2. Claude 3 is emotionally intelligent, great for angry users

Claude 3 is particularly strong in tone and emotional context. For industries where customers are often frustrated, like travel, ticketing, or internet outages, Claude responds empathetically and diplomatically. However, Claude 3 Opus was slower than GPT-4o in our tests and is similarly expensive.

3. Mistral + RAG = Solid Value

The Mistral 7B and Mixtral 8x7B models offer incredible value when they are fine-tuned on your domain and paired with a good RAG pipeline. They're fast, extremely affordable, and, in our testing, competitive in accuracy for about 80% of typical support queries. On WhatsApp, where sub-2s latency matters, this combination shines.
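The retrieval half of a RAG pipeline fits in a few lines. This is a minimal, self-contained illustration and not Heltar's pipeline. It replaces embedding search with simple keyword overlap. The FAQ entries, the `HANDOFF_TO_AGENT` sentinel, and the function names are hypothetical, and the call to the model itself (Mistral or otherwise) is left out.

```python
import re
from collections import Counter

# Hypothetical FAQ entries standing in for a real knowledge base.
FAQ = [
    "Refunds are processed within 5-7 business days after the returned item is received.",
    "Orders can be cancelled free of charge until they are shipped.",
    "Delivery to metro cities usually takes 2-3 days; other locations take 4-6 days.",
]

# Small illustrative stopword list so filler words don't count as matches.
STOPWORDS = {"a", "an", "and", "are", "be", "can", "do", "for", "how", "i",
             "is", "it", "my", "of", "the", "to", "what", "when", "will"}


def tokenize(text: str) -> Counter:
    """Lowercased word counts minus stopwords; a crude stand-in for embeddings."""
    return Counter(w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS)


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return up to k docs sharing the most words with the query (keyword overlap)."""
    q = tokenize(query)
    scored = [(sum((q & tokenize(d)).values()), d) for d in docs]
    scored = [pair for pair in scored if pair[0] > 0]  # drop docs with no overlap
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in scored[:k]]


def build_prompt(query: str, context: list[str]) -> str:
    """Grounded prompt: answer only from retrieved FAQ text, otherwise hand off."""
    ctx = "\n".join(f"- {c}" for c in context) or "- (no matching FAQ entry)"
    return (
        "Answer the customer using ONLY the FAQ excerpts below. "
        "If they do not contain the answer, reply exactly: HANDOFF_TO_AGENT\n\n"
        f"FAQ excerpts:\n{ctx}\n\nCustomer: {query}\nAnswer:"
    )


question = "How many days for a refund to be processed?"
print(build_prompt(question, retrieve(question, FAQ)))
```

In production you would typically replace `retrieve` with vector search over embeddings. The grounding instruction and the explicit handoff path stay the same, and they help keep a small model from inventing answers when nothing relevant is retrieved.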

4. Command R+ punches above its weight

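Cohere's Command R+ is an open-weights model optimized for RAG and tool use. Its weights are released under a non-commercial license, so commercial deployments need Cohere's hosted API or a separate license. It offers one of the best blends of instruction following, hallucination control, and cost, especially for Indian startups with lean budgets.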

5. Meta LLaMA 3 shows promise, but not plug-and-play

LLaMA 3 (8B and 70B variants) has improved significantly at following instructions and maintaining context. But out of the box, it still needs fine-tuning or wrapper logic to handle specific support use cases, especially structured WhatsApp interactions.
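Wrapper logic can be as simple as validating the model's output before it is sent. The sketch below assumes the prompt asks the model for a hypothetical JSON shape, `{"text": ..., "buttons": [...]}`, and the function name is illustrative. The limits of 3 reply buttons and 20 characters per button title are WhatsApp's interactive reply-button constraints as we understand them. Check them against Meta's current documentation.

```python
import json

# WhatsApp reply-button limits as we understand them; verify against Meta's current docs.
MAX_BUTTONS = 3
MAX_BUTTON_TITLE_CHARS = 20


def parse_reply(raw: str, fallback_text: str) -> dict:
    """Validate LLM output against a hypothetical {"text": str, "buttons": [str]} schema.

    Returns a button message only when every constraint holds; otherwise degrades
    to plain text so malformed output is never sent as an interactive message.
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        # The model ignored the JSON instruction entirely: send a safe canned reply.
        return {"type": "text", "text": fallback_text}

    text = data.get("text") if isinstance(data, dict) else None
    if not isinstance(text, str) or not text.strip():
        return {"type": "text", "text": fallback_text}

    buttons = data.get("buttons", [])
    valid_buttons = (
        isinstance(buttons, list)
        and 0 < len(buttons) <= MAX_BUTTONS
        and all(isinstance(b, str) and 0 < len(b) <= MAX_BUTTON_TITLE_CHARS for b in buttons)
    )
    if not valid_buttons:
        # No usable buttons: keep the model's text and send it on its own.
        return {"type": "text", "text": text}
    return {"type": "buttons", "text": text, "buttons": buttons}


print(parse_reply('{"text": "Track your order?", "buttons": ["Yes", "No"]}', "Sorry, please try again."))
```

Output that fails validation degrades to a plain-text message and is never sent malformed. This matters most for smaller models, which are more likely to drift from the requested format.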

Best Model Based on Business Type

| Business Type | Recommended Model(s) | Why? |
|---|---|---|
| Enterprise / BFSI / Healthcare | GPT-4o (via Azure), Claude 3 Opus | Highest factual accuracy, auditability, compliance |
| SaaS or D2C Mid-market | Command R+ or Gemini 1.5 (if GCP infra already exists) | Balanced performance with moderate budget |
| Startup / Shopify Store / QSR | Mistral 7B, LLaMA 3 | Fastest response, lowest cost, reliable with RAG |

Our Take at Heltar

We’ve embedded LLMs into our WhatsApp chatbot stack across industries, from insurance and retail to ed-tech and travel. Here is what we’ve found:

- You don’t always need GPT-4o to deliver great support.

- With strong RAG, domain fine-tuning, and structured prompting, Command R+ or Mistral can handle 80–90% of queries in our deployments.

- Speed and cost matter more than benchmark wins when your customers are waiting on WhatsApp.

That’s why we offer hybrid deployments that blend multiple LLMs and route each query based on its type, a confidence threshold for fallback, and business SLAs.
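A hybrid router along these lines could look like the sketch below. The model labels, keyword list, confidence threshold, latency cutoff, and function name are all hypothetical placeholders. `retrieval_score` stands in for whatever confidence signal your pipeline produces, such as the similarity of the top retrieved document.

```python
import re
from dataclasses import dataclass

# Hypothetical model labels: map these to whatever endpoints you actually run.
FAST_MODEL = "mistral-7b"
PREMIUM_MODEL = "gpt-4o"
HUMAN_AGENT = "human-agent"

# Hypothetical routing knobs.
SENSITIVE_KEYWORDS = {"refund", "complaint", "legal", "fraud", "chargeback"}
MIN_CONFIDENCE = 0.6          # below this, don't trust the fast model's grounded answer
PREMIUM_MIN_BUDGET_MS = 3000  # assumed SLA headroom the slower premium model needs


@dataclass
class Route:
    target: str
    reason: str


def route(query: str, retrieval_score: float, latency_budget_ms: int) -> Route:
    """Pick a target by query type, retrieval confidence, and latency SLA."""
    words = set(re.findall(r"[a-z]+", query.lower()))
    # 1. Query type: sensitive topics always go to the premium model.
    if words & SENSITIVE_KEYWORDS:
        return Route(PREMIUM_MODEL, "sensitive query type")
    # 2. Confidence: weak retrieval makes the fast model more likely to guess.
    if retrieval_score < MIN_CONFIDENCE:
        if latency_budget_ms >= PREMIUM_MIN_BUDGET_MS:
            return Route(PREMIUM_MODEL, "low retrieval confidence")
        # 3. SLA: no time for a slower model, so hand off instead of guessing.
        return Route(HUMAN_AGENT, "low confidence, tight latency budget")
    return Route(FAST_MODEL, "routine query")


print(route("What are your store hours?", retrieval_score=0.9, latency_budget_ms=1500))
```

The ordering here is a design choice. Sensitive topics bypass the cost check entirely, and a low-confidence query on a tight SLA goes to a person rather than to a model that would have to guess.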

Want Help Picking the Right Model?

If you’re building a WhatsApp-based customer service chatbot and are confused by the LLM buzz, let’s simplify it for you.

- We benchmark and fine-tune models on your data

- We deploy them on your infrastructure or ours

- We integrate with your existing WhatsApp API setup

- We add fallback, language localization, and analytics

Get in touch with Heltar to explore the smartest way to build your GenAI chatbot.

Because in 2025, support that doesn’t feel like support? That’s what customers remember.